How To Access and Display Depth Information as a Shader

Working with camera-related images in an augmented reality application usually means working with a transformation matrix paired with the image. This is because the aspect ratio of a camera image is not guaranteed to match the aspect ratio of the application's viewport, which in most cases covers the entire screen of the device. To avoid distortion, the image needs to be cropped, padded, or even rotated before it is displayed. For the AR background image, these transformations are performed via the display matrix. The same matrix can be used to display the depth image, as long as the depth image and the AR background image have the same aspect ratio.

The standard approach to acquiring the depth image with AR Foundation is to access it through the AR Occlusion Manager. When using Lightship, the Lightship Occlusion Extension provides access to it as well. This how-to shows both the traditional way and Lightship's approach to accessing the depth texture and displaying it full screen.

Prerequisites

You will need a Unity project with ARDK installed and a basic AR scene set up. For more information, see Installing ARDK 3 and Setting up an AR Scene. Niantic also recommends that you set up Playback so that you can test in the Unity editor.

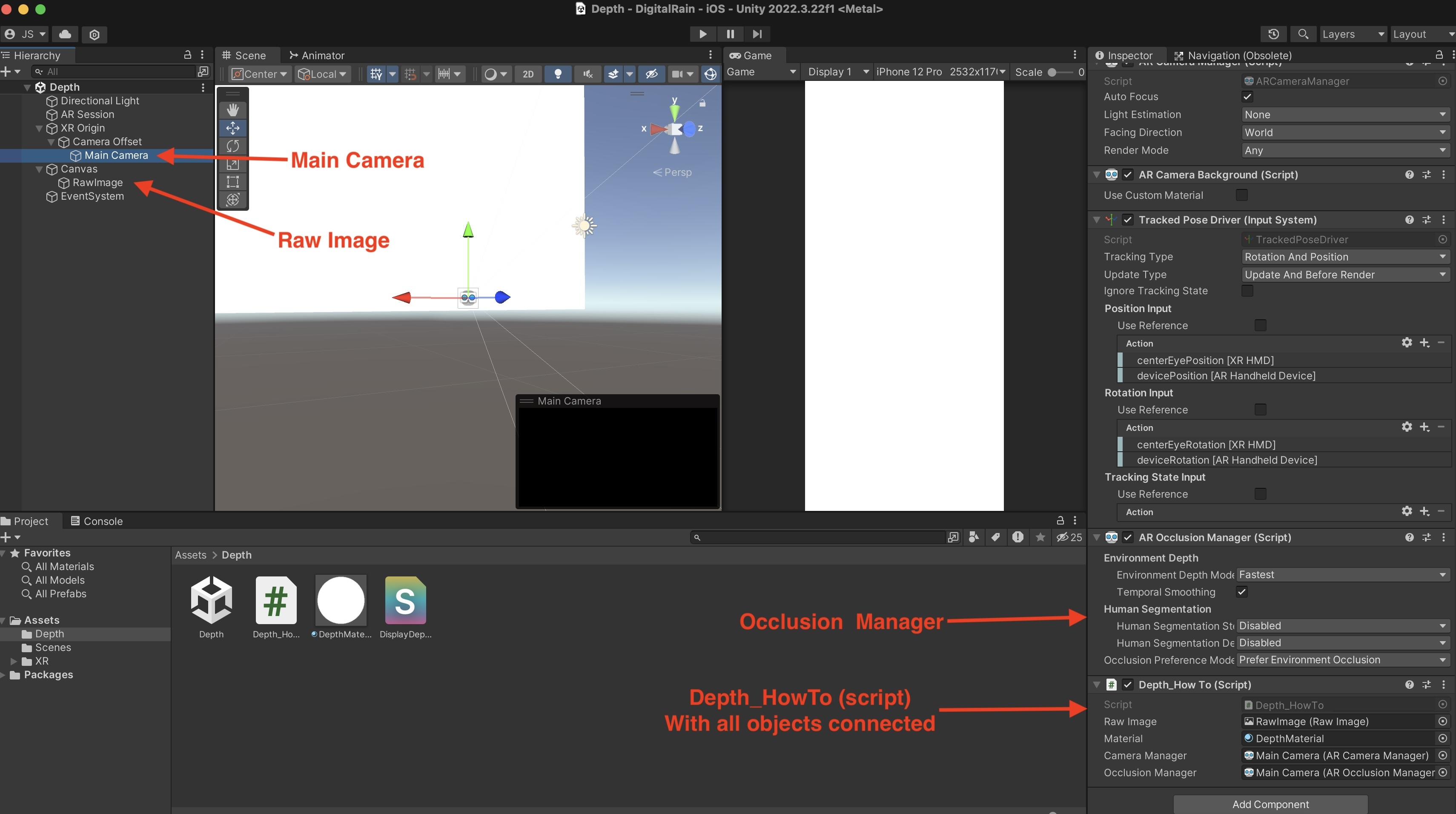

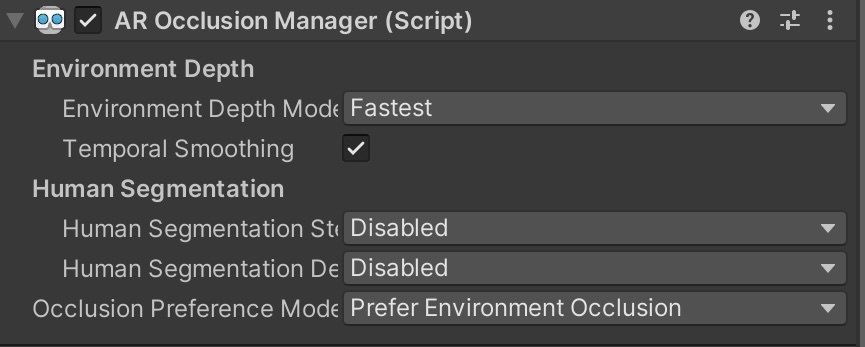

Adding the AR Occlusion Manager

In AR Foundation, the AR Occlusion Manager MonoBehaviour provides access to the depth buffer. To add an AR Occlusion Manager to your project:

- Add an `AROcclusionManager` to your Main Camera `GameObject`:
  - In the Hierarchy, expand the `XROrigin` and Camera Offset, then select the Main Camera object. Then, in the Inspector, click Add Component and add an `AROcclusionManager`.

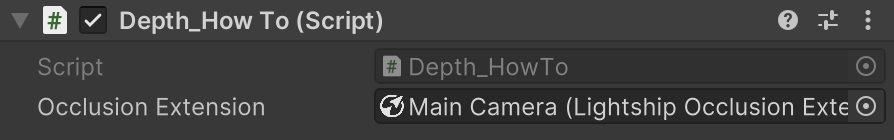

Adding the Lightship Occlusion Extension

To add the extension:

- Add a `LightshipOcclusionExtension` to the Main Camera `GameObject`:
  - In the Hierarchy, expand the `XROrigin` and select the Main Camera. Then, in the Inspector, click Add Component and add a `Lightship Occlusion Extension`.

Accessing the Depth Texture From the Occlusion Manager (Approach A)

To access the depth texture in code, you will need a script that polls the camera and the Occlusion Manager:

- In the Project window, create a C# Script file in the Assets folder.

- Name it

Depth_HowTo. - Open

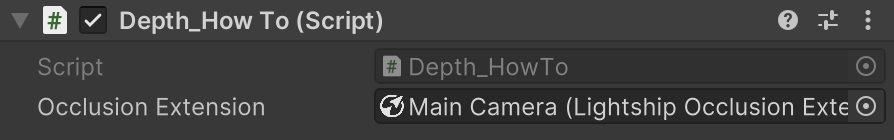

Depth_HowTo.csin a file editor, then add the code from the snippet after these steps. - Once the script is ready, select the Main Camera from the Hierarchy, then add it as a Component in the Inspector. Click the circle next to the Occlusion Manager field, then select the Main Camera.

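```csharp
// CameraMath and XRDisplayContext (namespace assumed; check it against your ARDK version)
using Niantic.Lightship.AR.Utilities;
using UnityEngine;
using UnityEngine.XR.ARFoundation;

public class Depth_HowTo : MonoBehaviour
{
    public AROcclusionManager _occlusionManager;

    void Update()
    {
        // Skip this frame if the occlusion subsystem is missing or not running yet
        if (_occlusionManager.subsystem == null || !_occlusionManager.subsystem.running)
        {
            return;
        }

        // The depth texture can be null until the first depth frame arrives
        var texture = _occlusionManager.environmentDepthTexture;
        if (texture == null)
        {
            return;
        }

        // Calculate a matrix that fits the depth image to the screen
        var displayMatrix = CameraMath.CalculateDisplayMatrix
        (
            texture.width,
            texture.height,
            Screen.width,
            Screen.height,
            XRDisplayContext.GetScreenOrientation()
        );

        // Do something with the texture
        // ...
    }
}
```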

Script Walkthrough

- In this example, we acquire the depth image from AR Foundation's occlusion manager. This image has the same aspect ratio as the AR background image.

- We use Lightship's math library to calculate a display matrix that fits the depth image to the screen. We could also use the display matrix provided via the frame update callbacks of the camera manager, but the layout of that matrix would vary on different platforms.
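To make the idea of fitting an image to the screen concrete, here is a minimal sketch in plain C# (no Unity or Lightship dependencies) of a centered crop expressed as a UV scale and offset. It is not how `CameraMath.CalculateDisplayMatrix` is implemented. The `DisplayFit` class and `CenterCrop` method are hypothetical names used only for illustration, and rotation for different screen orientations is left out.

```csharp
using System;

// Hypothetical helper for illustration only; not part of Unity or Lightship.
public static class DisplayFit
{
    // Returns a UV scale and offset such that
    //   imageUv = viewportUv * scale + offset
    // samples a centered region of the image that has the viewport's aspect ratio.
    public static (double scaleX, double scaleY, double offsetX, double offsetY) CenterCrop(
        int imageWidth, int imageHeight, int viewportWidth, int viewportHeight)
    {
        double imageAspect = (double)imageWidth / imageHeight;
        double viewportAspect = (double)viewportWidth / viewportHeight;

        double scaleX = 1.0;
        double scaleY = 1.0;

        if (imageAspect > viewportAspect)
        {
            // The image is wider than the viewport: crop the left and right edges.
            scaleX = viewportAspect / imageAspect;
        }
        else
        {
            // The image is taller than the viewport: crop the top and bottom edges.
            scaleY = imageAspect / viewportAspect;
        }

        // Center the visible region inside the image.
        return (scaleX, scaleY, (1.0 - scaleX) / 2.0, (1.0 - scaleY) / 2.0);
    }
}
```

For example, `DisplayFit.CenterCrop(256, 144, 400, 300)` yields a horizontal scale of 0.75 and a horizontal offset of 0.125, so the leftmost and rightmost eighths of a 16:9 image fall outside a 4:3 viewport.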

Accessing the Depth Texture From the Lightship Occlusion Extension (Approach B)

To access the depth texture in code, you will need a script that polls the Lightship Occlusion Extension:

- In the Project window, create a C# Script file in the Assets folder.

- Name it `Depth_HowTo`.

- Open `Depth_HowTo.cs` in a file editor, then add the code from the snippet after these steps.

- Once the script is ready, select the Main Camera from the Hierarchy, then add the script as a Component in the Inspector. Click the circle next to the Occlusion Extension field, then select the Main Camera.

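```csharp
// LightshipOcclusionExtension (namespace assumed; check it against your ARDK version)
using Niantic.Lightship.AR.Occlusion;
using UnityEngine;
using UnityEngine.XR.ARFoundation;

public class Depth_HowTo : MonoBehaviour
{
    public LightshipOcclusionExtension _occlusionExtension;

    void Update()
    {
        // Latest depth texture provided by the occlusion extension
        var texture = _occlusionExtension.DepthTexture;

        // Matrix that fits the depth texture to the screen
        var displayMatrix = _occlusionExtension.DepthTransform;

        // Do something with the texture
        // ...
    }
}
```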

Script Walkthrough

- In this example, we acquire the depth image from Lightship's AR occlusion extension. The aspect ratio of this image is not guaranteed to match the AR background image.

- Besides the depth texture, the occlusion extension also provides an appropriate transformation matrix to display depth on screen. This matrix is different from a traditional display matrix, because it also contains warping to compensate for missing frames.
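Because this matrix can contain warping, the transformed UV coordinates are homogeneous and must be divided by their third component before sampling, which is what the shader later in this how-to does. The following plain C# sketch (no Unity dependencies) mirrors that arithmetic on the CPU so it is easier to reason about. The `HomogeneousUv` class is a hypothetical name, and the row-major array layout is an assumption made for illustration, not a description of how Lightship stores `DepthTransform`.

```csharp
using System;

// Hypothetical helper for illustration only; not part of Unity or Lightship.
public static class HomogeneousUv
{
    // Multiplies a row-major 4x4 matrix by the column vector (u, v, 1, 1),
    // keeps the first three components (x, y, z), then divides x and y by z
    // to convert from homogeneous to cartesian coordinates.
    public static (double u, double v) Transform(double[,] m, double u, double v)
    {
        double[] input = { u, v, 1.0, 1.0 };
        double[] output = new double[3];

        for (int row = 0; row < 3; row++)
        {
            for (int col = 0; col < 4; col++)
            {
                output[row] += m[row, col] * input[col];
            }
        }

        return (output[0] / output[2], output[1] / output[2]);
    }
}
```

With an identity matrix the coordinates pass through unchanged. A matrix whose third row produces a z value other than 1 rescales the result, which is why skipping the division would sample the wrong texel whenever warping is present.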

Adding a Raw Image to Display the Depth Buffer

By adding a raw image to the scene and attaching a material to it that transforms the depth buffer to align it with the screen, we can display live depth information.

To set up a live depth display:

- Right-click in the Hierarchy, then open the UI menu and select Raw Image.

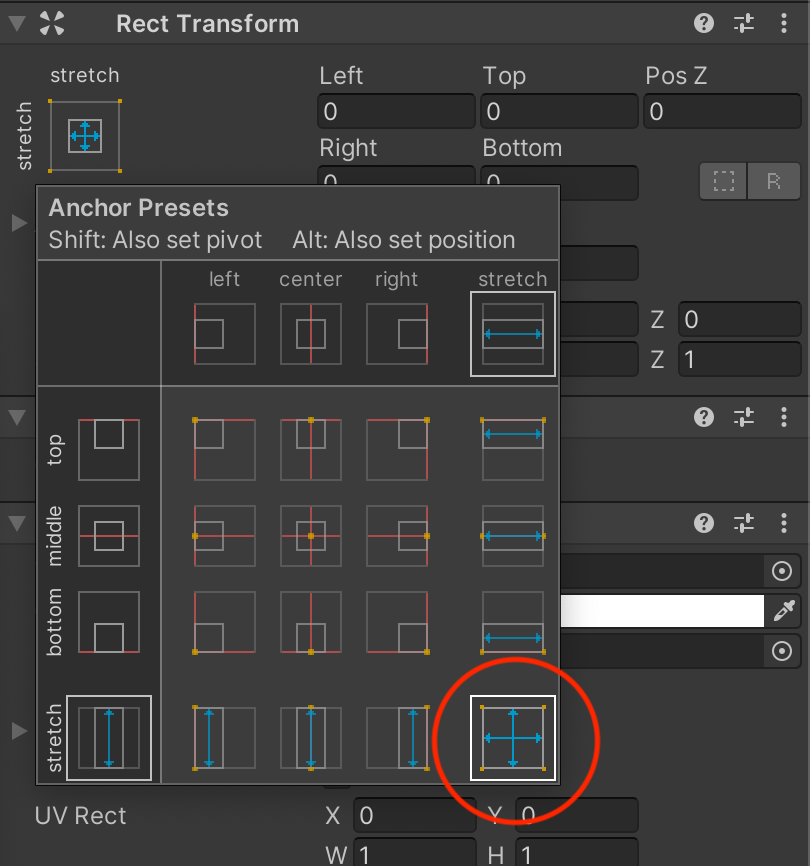

- Using the image transform tools, center the Raw Image and stretch it across the screen to make it visible later (see image below).

- Set all parameters (Left, Top, Z, Right, Bottom) to 0.

Adding a Material and Shader

To create a material and shader:

- In the Assets window, right-click, then mouse over Create and select Material. Name it DepthMaterial.

- Repeat this process, but open the Shader menu and select Unlit Shader. Name the new shader DisplayDepth.

- Drag the shader onto the material to connect them.

- Add the code from DisplayDepth Shader Code to the DisplayDepth shader.

DisplayDepth Shader Code

The depth display is a standard full-screen shader with vert and frag sections. In the vert section, we multiply the UVs by the display transform. The frag section then uses the transformed coordinates to sample the depth texture, which aligns the depth image with the screen.

```
Shader "Unlit/DisplayDepth"
{
    Properties
    {
        _DepthTex ("_DepthTex", 2D) = "green" {}
    }
    SubShader
    {
        Tags {"Queue"="Transparent" "IgnoreProjector"="True" "RenderType"="Transparent"}
        Blend SrcAlpha OneMinusSrcAlpha
        Cull Off ZWrite Off ZTest Always

        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag

            #include "UnityCG.cginc"

            struct appdata
            {
                float4 vertex : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct v2f
            {
                float3 texcoord : TEXCOORD0;
                float4 vertex : SV_POSITION;
            };

            // Sampler for the depth texture
            sampler2D _DepthTex;

            // Transform from screen space to depth texture space
            float4x4 _DepthTransform;

            inline float ConvertDistanceToDepth(float d)
            {
                // Clip any distances smaller than the near clip plane, and compute the depth value from the distance.
                return (d < _ProjectionParams.y) ? 0.0f : ((1.0f / _ZBufferParams.z) * ((1.0f / d) - _ZBufferParams.w));
            }

            v2f vert (appdata v)
            {
                v2f o;
                o.vertex = UnityObjectToClipPos(v.vertex);

                // Apply the image transformation to the UV coordinates
                o.texcoord = mul(_DepthTransform, float4(v.uv, 1.0f, 1.0f)).xyz;

                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                // Since the depth image transform may contain reprojection (for warping)
                // we need to convert the uv coordinates from homogeneous to cartesian
                float2 depth_uv = float2(i.texcoord.x / i.texcoord.z, i.texcoord.y / i.texcoord.z);

                // The depth value can be accessed by sampling the red channel
                // The values in the texture are metric eye depth (distance from the camera)
                float eyeDepth = tex2D(_DepthTex, depth_uv).r;

                // Convert the eye depth to a z-buffer value
                // The z-buffer value is a nonlinear value in the range [0, 1]
                float depth = ConvertDistanceToDepth(eyeDepth);

                // Use the z-value as color
                #ifdef UNITY_REVERSED_Z
                return fixed4(depth, depth, depth, 1.0f);
                #else
                return fixed4(1.0f - depth, 1.0f - depth, 1.0f - depth, 1.0f);
                #endif
            }
            ENDCG
        }
    }
}
```
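The `ConvertDistanceToDepth` function in the shader is the inverse of Unity's `LinearEyeDepth` helper. The plain C# sketch below (no Unity dependencies) reproduces both formulas so the relationship can be checked on the CPU. It assumes the conventional (non-reversed) depth buffer, where Unity documents `_ZBufferParams` as `x = 1 - far/near`, `y = far/near`, `z = x/far`, `w = y/far`. The `DepthConversion` class and its method names are hypothetical and exist only for illustration.

```csharp
using System;

// Hypothetical helper for illustration only; not part of Unity or Lightship.
public static class DepthConversion
{
    // Mirrors _ZBufferParams for a conventional (non-reversed) depth buffer:
    // x = 1 - far/near, y = far/near, z = x/far, w = y/far.
    public static (double x, double y, double z, double w) ZBufferParams(double near, double far)
    {
        double x = 1.0 - far / near;
        double y = far / near;
        return (x, y, x / far, y / far);
    }

    // Same formula as ConvertDistanceToDepth in the shader:
    // distances closer than the near plane map to 0.
    public static double DistanceToDepth(double distance, double near, double far)
    {
        var p = ZBufferParams(near, far);
        return distance < near ? 0.0 : (1.0 / p.z) * ((1.0 / distance) - p.w);
    }

    // Same formula as Unity's LinearEyeDepth: 1 / (z * depth + w).
    public static double LinearEyeDepth(double depth, double near, double far)
    {
        var p = ZBufferParams(near, far);
        return 1.0 / (p.z * depth + p.w);
    }
}
```

Under these assumptions, a distance equal to the near plane maps to approximately 0, a distance equal to the far plane maps to approximately 1, and feeding the result back through `LinearEyeDepth` recovers the original metric distance.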

Passing the Depth to the Raw Image

To pass depth information to the raw image in the script:

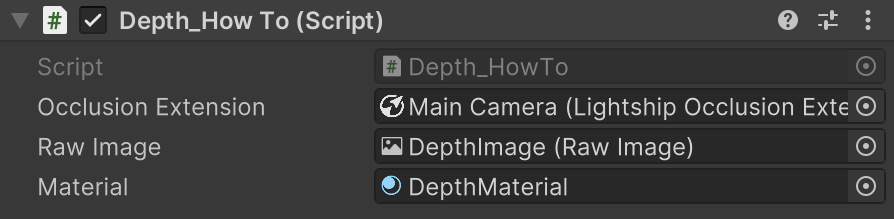

- Update the script to take in a `RawImage` and `Material`.

- Set the depth texture and display transform for that `Material`.

- Add `SetTexture` and `SetMatrix` to the script to pass depth and transform information to the shader.

- Connect the `Material` and `RawImage` to the script in Unity.

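```csharp
using UnityEngine.UI;

public class Depth_HowTo : MonoBehaviour
{
    public RawImage _rawImage;
    public Material _material;

    // ...

    void Update()
    {
        if (_occlusionManager.subsystem == null || !_occlusionManager.subsystem.running)
        {
            return;
        }

        var texture = _occlusionManager.environmentDepthTexture;
        if (texture == null)
        {
            return;
        }

        // Same display matrix calculation as in Approach A
        var displayMatrix = CameraMath.CalculateDisplayMatrix
        (
            texture.width, texture.height,
            Screen.width, Screen.height,
            XRDisplayContext.GetScreenOrientation()
        );

        // Add our material to the raw image
        _rawImage.material = _material;

        // Set our variables in our shader
        // NOTE: Updating the depth texture needs to happen in the Update() function
        // With Approach B, pass _occlusionExtension.DepthTexture and _occlusionExtension.DepthTransform instead
        _rawImage.material.SetTexture("_DepthTex", texture);
        // The property name must match the _DepthTransform matrix declared in the shader
        _rawImage.material.SetMatrix("_DepthTransform", displayMatrix);
    }
}
```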

Testing the Setup and Building to Device

To connect all the pieces and test it out:

- In the Inspector, verify that the Main Camera has an Occlusion Manager and the `Depth_HowTo` script (with `Material` and `RawImage` configured) attached to it.

You should now be able to test using Playback in the Unity Editor. You can also open Build Settings and click Build and Run to build to a device and try it out.