Depth

ARDK Depth Estimation provides a per-pixel depth map of the real world, enabling applications to estimate the real-world distance (in meters) from the device camera to each pixel on the screen. This feature has a variety of use cases, including (but not limited to):

- Writing depth values into the z-buffer for occlusion.

- Placing objects in the world by fetching the 3-D position from the depth buffer.

- Constructing a mesh as part of the meshing system.

- Applying visual effects such as depth of field to focus on nearby subjects.
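For the first use case, a metric depth value usually has to be converted into the non-linear value that a depth buffer stores. The sketch below uses the standard formula for a conventional (non-reversed) perspective projection with a [0, 1] depth range. `DepthBufferMath` and `EyeDepthToBufferDepth` are hypothetical names. The sketch assumes the Lightship value is depth along the camera's forward axis, and the exact encoding depends on the graphics API: on platforms where Unity uses a reversed depth buffer, the stored value is 1 minus this result.

```csharp
using System;

public static class DepthBufferMath
{
    // Hypothetical helper (not part of ARDK): maps a metric eye-space depth in meters
    // to a [0, 1] depth-buffer value for a standard, non-reversed perspective projection.
    // near/far are the camera clip planes; the input is clamped into [near, far].
    public static float EyeDepthToBufferDepth(float depthMeters, float near, float far)
    {
        float d = Math.Min(Math.Max(depthMeters, near), far);

        // z_buffer = f / (f - n) * (1 - n / d):
        // 0 at the near plane, 1 at the far plane, with most precision near the camera.
        return far / (far - near) * (1f - near / d);
    }
}
```

A custom occlusion shader would apply the equivalent conversion per pixel. The CPU version is shown only to make the mapping explicit.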

This example uses the depth map to create a 'pulse' that travels from near to far:

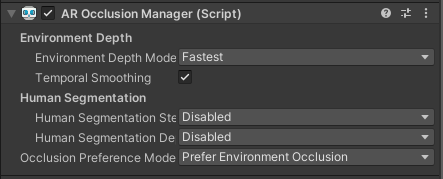

AR Occlusion Manager

ARDK 3.0 is integrated with Unity's AR Foundation Occlusion Subsystem. When Lightship is enabled in XR Settings, we provide an implementation of the subsystem that leverages Lightship’s advanced depth. As a developer, all you have to do is place the standard AROcclusionManager in your scene.

While the AROcclusionManager interface is the same as in AR Foundation, several of its options behave differently under Lightship:

- Environment Depth Mode: In Lightship, this parameter selects one of several modified neural network architectures:

  - Medium: Estimates depth using our in-house multidepth architecture.

  - Best: Estimates depth using a modified multidepth anti-flicker model that takes into account the depth map from the previous timestep.

  - Fastest: Estimates depth using a smaller but potentially less accurate model.

- Temporal Smoothing: Not supported in Lightship.

- Human Segmentation: Lightship has a separate feature that segments a larger set of classes, including humans (see the Semantics feature page). Lightship ignores these options, so you can leave them set to Disabled.

- Occlusion Preference Mode: In AR Foundation, this option normally chooses between environment occlusion and human occlusion. In Lightship, its only use is to turn occlusion off by setting it to No Occlusion.
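The same options can also be set from a script through AR Foundation's `requested*` properties on AROcclusionManager. The sketch below assumes AR Foundation 5.x property and enum names. The component name `ConfigureLightshipOcclusion` and the `SetOcclusionEnabled` method are hypothetical. Using `PreferEnvironmentOcclusion` as the "on" value is also an assumption, since the text above only says Lightship uses this option to turn occlusion off.

```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;

// Hypothetical component: applies the Inspector options described above from code.
public class ConfigureLightshipOcclusion : MonoBehaviour
{
    [SerializeField]
    private AROcclusionManager _occlusionManager;

    void Start()
    {
        // With Lightship enabled, Best selects the anti-flicker multidepth model.
        _occlusionManager.requestedEnvironmentDepthMode = EnvironmentDepthMode.Best;

        // Lightship ignores the human segmentation options; keep them disabled.
        _occlusionManager.requestedHumanStencilMode = HumanSegmentationStencilMode.Disabled;
        _occlusionManager.requestedHumanDepthMode = HumanSegmentationDepthMode.Disabled;
    }

    // Turns occlusion on or off at runtime through the occlusion preference mode.
    public void SetOcclusionEnabled(bool enabled)
    {
        _occlusionManager.requestedOcclusionPreferenceMode = enabled
            ? OcclusionPreferenceMode.PreferEnvironmentOcclusion
            : OcclusionPreferenceMode.NoOcclusion;
    }
}
```

Wiring `SetOcclusionEnabled` to a UI toggle is one way to compare the scene with and without occlusion on a device.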

Accessing Depth as a Texture

With the AROcclusionManager component in your scene, you can access the latest depth map as a GPU texture within a MonoBehaviour:

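```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
// ...

public class AccessDepthMap : MonoBehaviour
{
    public AROcclusionManager _occlusionManager;

    // ...

    void Update()
    {
        // Skip frames until the occlusion subsystem exists and is running.
        if (_occlusionManager.subsystem == null || !_occlusionManager.subsystem.running)
        {
            return;
        }

        // ...

        // Latest depth map as a GPU texture (raw Lightship output).
        var depthTexture = _occlusionManager.environmentDepthTexture;

        // ...
    }

    // ...
}
```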

Because of the neural network that Lightship uses for depth estimation, the depthTexture surfaced by the occlusion manager is rotated 90 degrees counterclockwise and has a fixed aspect ratio. An example input image is shown here (left) with its corresponding depth texture (right).

The Display Depth as a Shader How-To provides an example shader that rotates the image 90 degrees clockwise and transforms the output depth image to the proper aspect ratio using a display matrix.
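To make the rotation concrete, the sketch below maps a screen UV to the corresponding UV in the raw depth image using the quarter-turn alone. It assumes Unity-style UVs (origin at the bottom-left, v pointing up) and reads "rotated 90 degrees counterclockwise" as the image content being turned a quarter-turn counterclockwise relative to the screen. It deliberately ignores the fixed aspect ratio, which the display matrix handles in practice. `DepthUv` and `ScreenToRotatedDepthUv` are hypothetical names.

```csharp
public static class DepthUv
{
    // Hypothetical helper (not part of ARDK). Rotation only: the aspect-ratio
    // crop handled by the display matrix is NOT modeled here.
    public static (float u, float v) ScreenToRotatedDepthUv(float screenU, float screenV)
    {
        // Turning image content 90 degrees counterclockwise moves screen point (u, v)
        // to (1 - v, u) in the depth image, so that is where we sample.
        return (1f - screenV, screenU);
    }
}
```

For example, the top-left corner of the screen, (0, 1), lands at the bottom-left corner of the depth image, (0, 0). Because a quarter-turn keeps the center fixed, the rotation by itself maps the screen center (0.5, 0.5) to (0.5, 0.5).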

Sampling the Depth Map on the CPU

With the AROcclusionManager component in your scene, you can sample the latest depth map within a MonoBehaviour. This snippet shows how to get the depth map on the CPU and find the depth at the approximate center of the screen.

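```csharp
using UnityEngine;
using UnityEngine.XR.ARFoundation;
using UnityEngine.XR.ARSubsystems;
// ... plus the Lightship namespace that provides the XRCpuImage.Sample<T> extension

public class SampleDepthMap : MonoBehaviour
{
    public AROcclusionManager _occlusionManager;

    // Display matrix that maps screen UVs into the raw depth image.
    // Not set in this snippet; see the Convert a Point to a World Position Using Depth How-To.
    private Matrix4x4 m_DisplayMatrix;

    // Most recent CPU depth image; it holds native memory and must be disposed.
    private XRCpuImage? depthImage;

    void Update()
    {
        if (_occlusionManager.subsystem == null || !_occlusionManager.subsystem.running)
        {
            return;
        }

        if (_occlusionManager.TryAcquireEnvironmentDepthCpuImage(out var image))
        {
            // Release the previous frame's image before keeping the new one.
            depthImage?.Dispose();
            depthImage = image;
        }
        else
        {
            return;
        }

        var uvCenter = new Vector2(0.5f, 0.5f); // screen center in normalized coordinates

        // Depth in meters at the center of the screen.
        var depthCenter = depthImage.Value.Sample<float>(uvCenter, m_DisplayMatrix);
    }

    void OnDestroy()
    {
        // Dispose the last image we kept so its native memory is released.
        depthImage?.Dispose();
    }
}
```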

Again, depthImage here is the raw output from Lightship (rotated 90 degrees counterclockwise, with a fixed aspect ratio). The Convert a Point to a World Position Using Depth How-To shows how to get the depth map on the CPU and sample it using the camera's display matrix.
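Once you have a depth sample for a screen point, Unity's `Camera.ScreenToWorldPoint` can turn it into a world position for placing content. The sketch below is not the How-To's code. It assumes the sampled value is depth along the camera's forward axis and that one Unity unit equals one meter (XR Origin at scale 1). `DepthToWorld` and `ScreenUvToWorld` are hypothetical names.

```csharp
using UnityEngine;

public static class DepthToWorld
{
    // Hypothetical helper: converts a normalized screen UV (origin bottom-left)
    // and a depth sample in meters into a world-space position.
    public static Vector3 ScreenUvToWorld(Camera camera, Vector2 screenUv, float depthMeters)
    {
        // ScreenToWorldPoint takes pixel coordinates in x/y and the distance
        // from the camera, in world units, in z.
        var screenPoint = new Vector3(
            screenUv.x * camera.pixelWidth,
            screenUv.y * camera.pixelHeight,
            depthMeters);
        return camera.ScreenToWorldPoint(screenPoint);
    }
}
```

In the SampleDepthMap snippet, a call such as `DepthToWorld.ScreenUvToWorld(arCamera, uvCenter, depthCenter)` at the end of `Update` would give the world position at the screen center, where `arCamera` is a hypothetical reference to the AR camera.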

Learn more

You can see how to use depth in our depth-related How-tos:

You can also look at our depth-related samples: