Semantics

Semantic segmentation is the process of assigning class labels to specific regions in an image. This richer understanding of the environment enables a variety of creative AR features. For example, an AR pet could identify ground to run along, AR planets could fill the sky, the real-world ground could turn into AR lava, and more!

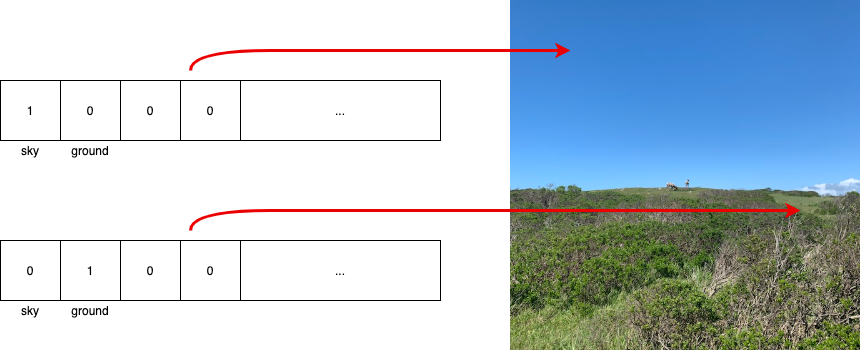

ARDK 3 provides this feature through an ARSemanticSegmentationManager that implements a new XR Semantics subsystem. ARSemanticSegmentationManager serves semantic predictions as a buffer of unsigned integers for each pixel of the depth map. Each of a pixel’s 32 bits correspond to a semantic class and is either enabled (value is 1) or disabled (value is 0), depending on whether a part of an object in that class exists at that pixel. The ARDK also provides a normalized (values between 0 and 1) confidence map for each category that applications can query individually.

Each pixel can have more than one class label, e.g. ground and natural_ground.

Available Semantic Channels

The following table lists the current set of semantic channels. Because the ordering of channels in this list may change with new versions of ARDK, we recommend that you use names rather than indices in your app. Use LightshipSemanticsSubsystem.GetChannelNames to verify names at runtime.

Click to reveal the table of semantic channels

| Index | Channel Name | Notes |

|---|---|---|

| [0] | sky | Includes clouds. Does not include fog. |

| [1] | ground | Includes everything in natural_ground and artificial_ground. Ground may be more reliable than the combination of the two where there is ambiguity about natural vs artificial. |

| [2] | natural_ground | Includes dirt, grass, sand, mud, and other organic / natural ground. Ground with heavy vegetation or foliage may be detected as foliage instead. |

| [3] | artificial_ground | Includes roads, sidewalks, tracks, carpets, rugs, flooring, paths, gravel, and some playing fields. |

| [4] | water | Includes rivers, seas, ponds, lakes, pools, waterfalls, some puddles. Does not include drinking water, water in cups, bowls, sinks, baths. Water with strong reflections may be detected as what is in the reflection instead of as water. |

| [5] | person | Includes body parts, hair, and clothes worn. Does not include accessories or carried objects. Does not distinguish between individuals. Mannequins, toys, statues, paintings, and other artistic expressions of a person are not considered a “person”, though some detections may occur. Photorealistic images of a person are detected as a “person”. Model performance may suffer when a person is partially visible in the image or is in an unusual body posture, such as when crouching or widely spreading their arms. |

| [6] | building | Includes residential and commercial buildings, both modern and traditional. Should not be considered synonymous with walls. |

| [7] | foliage | Includes bushes, shrubs, leafy parts and trunks of trees, potted plants, and flowers. |

| [8] | grass | Grassy ground, e.g. lawns, rather than tall grass. |

Experimental Semantic Channels

Lightship also offers a set of experimental semantic channels. Because they are experimental, these channels may need accuracy improvements; if you find an issue with them, please provide feedback in the Lightship Developer Community.

Experimental features are not recommended for use in production-level applications!

Click to reveal the experimental channels

| Index | Channel Name | Notes |

|---|---|---|

| [9] | flower_experimental | The part of a plant that has brightly colored petals and provides pollen. Does not include any green parts (stems, leaves). |

| [10] | tree_trunk_experimental | The part of a tree that connects the leafy crown and branches with its roots. Does not include leaves, branches, flowers, or processed/cut wood (logs). |

| [11] | pet_experimental | Only dogs and cats. Not designed to work on soft toys of dogs and cats. |

| [12] | sand_experimental | Finely divided rock and mineral particles. Sand has various compositions but is defined by its small grain size. Includes all types of sandy/gravelly surfaces found on the floor/ground, wet sand, and sandcastles/sand art. Does not include dirt, soil. |

| [13] | tv_experimental | Screen portion of any digital device. Includes both screens turned on and screens turned off. Excludes the frame (“bezel”) around the screen, and stands. |

| [14] | dirt_experimental | Includes mud and dust covering the ground. May overlap significantly with sand. Includes dirt roads. |

| [15] | vehicle_experimental | includes all components of vehicles. Covers all types of cars and trucks. Intended to also work on (but accuracy may vary) motorcycles, bicycles, rickshaws, horse carriages, tuk-tuks, trains/trams, boats/ships, airplanes/helicopters/hovercrafts. |

| [16] | food_experimental | Anything that can be consumed as “food” and not “drink” by human beings, including salt & pepper, and boxes/packaging likely to contain food or ingredients. Does not include live animals, pet food, or plates/dishes the food is served on. |

| [17] | loungeable_experimental | All objects intended to be used to sit on. Includes beds (including the legs and bases), and legs and bases for chairs or sofas. Does not include pet beds/blankets, or any empty spaces/gaps in chairs or sofas. |

| [18] | snow_experimental | Atmospheric water vapor frozen into ice crystals and falling in light white flakes or covering the ground as a white layer. Includes clean and dirty snow. Includes icebergs and icicles, ice/snow sculptures, igloos/snow houses. Does not include ice in drinks, snow in the sky. |

Example of Usage

In this example, the semantic segmentation system detects the sky as sky and sets index[0] to 1, then detects the ground as ground and sets index[1] to 1, as shown in the array diagrams.